What Is Robotic Vision?

While robots and vision technologies have existed for years, combining them creates a dynamic duo that significantly enhances a robot’s capabilities. According to the Society of Manufacturing Engineers’ Machine Vision Division, robot vision refers to devices that automatically receive and process images of real objects through optical devices and non-contact sensors. This definition goes beyond just cameras—LiDAR sensors, for example, also fit within this scope. In essence, machine vision allows robots to replicate human visual capabilities, enabling them to take on complex, tedious, and critical tasks in manufacturing and beyond.

At its core, robot vision gives robots the ability to perceive and interpret their surroundings through visual data, typically collected via cameras or image sensors. This field blends elements of computer vision, machine learning, and robotics to enable essential functions like object recognition, navigation, and manipulation in dynamic environments. Robot vision typically starts with image acquisition, using 2D, 3D, or depth cameras to capture information, followed by processing and analysis through advanced algorithms, including deep learning, to identify objects or actions. Additionally, robots often combine vision systems with other sensors, like LiDAR, to create detailed maps and determine their position using SLAM (Simultaneous Localization and Mapping). Robot vision applications span industries, from autonomous vehicles and drones to industrial and service robots, driving innovations like quality control, autonomous navigation, and object handling.

Components of Robotic Vision

Robotic vision needs hardware for data acquisition and software for processing.

Hardware

The hardware component comprises these parts.

- Lens: Focuses incoming light onto the image sensor and controls how much light reaches it

- Image Sensor: Has millions of light-sensitive pixels that convert the captured light into an electrical signal, which is fed to the chip for processing (more pixels mean higher resolution, so finer detail can be captured)

- Image Acquisition Card: Houses the required hardware to convert the electrical signal from the sensor into a digital image, including the chip

- I/O Units: The hardware components (sensor and card) must communicate with each other to transmit the signal data, which usually happens via serial communication

- Control Devices: Just like a human eye moves to view different places, these vision systems need control devices to adjust the camera to get a better field of view (if it is a humanoid robot, the head should also be controllable to change the field of vision)

- Lighting: Cameras are negatively affected by poor lighting, so this component is necessary to improve visibility in low-light conditions.

The image sensor and lens together form the vision system’s camera, and its resolution depends largely on the pixel count. Overall hardware quality, communication, and coordination are also important for making the system operate seamlessly.

Advanced robot vision systems incorporate sensor fusion to better understand the surrounding environment, so some add LiDAR, radar, and ultrasonic sensors alongside the cameras.
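One simple way to picture sensor fusion is inverse-variance weighting, where each sensor's distance reading is weighted by how precise that sensor is. The sketch below is only an illustration of that idea, not how any particular robot does it; the function name `fuse_ranges` and the sensor readings are assumptions made up for the example.

```python
def fuse_ranges(measurements):
    """Fuse (distance_m, variance_m2) pairs by inverse-variance weighting.

    Sensors with a smaller variance (less noise) get a larger weight.
    Returns the fused distance and the variance of the fused estimate.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    fused = sum(w * d for w, (d, _) in zip(weights, measurements)) / total
    return fused, 1.0 / total


# Hypothetical readings of the same obstacle: (distance in m, variance in m^2).
lidar = (2.00, 0.01)       # assumed to be the more precise sensor
ultrasonic = (2.10, 0.04)  # assumed to be noisier
distance, variance = fuse_ranges([lidar, ultrasonic])
print(f"fused distance: {distance:.2f} m, variance: {variance:.3f} m^2")
```

The fused estimate sits closer to the more precise reading, and its variance is lower than that of either sensor alone, which is the practical reason for combining sensors.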

Another hardware component that can enhance vision is a 3D vision sensor (for example, stereo vision), which measures the distance to objects ahead with high accuracy.
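For a calibrated and rectified stereo pair, depth follows from the standard relation Z = f × B / d, where f is the focal length in pixels, B is the baseline between the two cameras, and d is the disparity in pixels. The sketch below applies that formula; the camera parameters are hypothetical values chosen for illustration.

```python
def stereo_depth_m(focal_length_px, baseline_m, disparity_px):
    """Depth of a point from a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical stereo rig: 700 px focal length, cameras 12 cm apart.
FOCAL_PX = 700.0
BASELINE_M = 0.12

near = stereo_depth_m(FOCAL_PX, BASELINE_M, disparity_px=42.0)
far = stereo_depth_m(FOCAL_PX, BASELINE_M, disparity_px=21.0)
print(f"disparity 42 px -> {near:.2f} m")
print(f"disparity 21 px -> {far:.2f} m")
```

Halving the disparity doubles the estimated distance, which is why stereo accuracy drops for faraway objects: a one-pixel disparity error matters more when the disparity itself is small.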

Software

The software on the image acquisition card decodes the electrical signal from the sensor into a digital image and interprets the scene ahead. Beyond basic image and signal processing, most of the remaining algorithms are powered by AI.

As noted earlier, robot vision tries to mimic human vision, so the best way to get meaningful information from a captured image is to apply intelligence as a human would, except that here the intelligence is artificial.

These AI algorithms include the following.

- Semantic Segmentation: This deep learning algorithm segments the RGB images and associates a category or label with each pixel in the image for fine perception.

- Semantic Recognition: Intelligently identifies object categories and people from the image pixels to understand the environment ahead.

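- Semantic SLAM: SLAM stands for Simultaneous Localization and Mapping, a technique in which the robot builds a map of its environment while tracking its own position within it. Semantic SLAM enriches that map with semantic information, typically produced by deep learning models, to support self-positioning.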
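To illustrate what "a label for each pixel" means in semantic segmentation, here is a minimal sketch of the final step only: picking the highest-scoring class per pixel. The class names and the score values are made up; in a real system the scores would come from a trained deep learning model.

```python
CLASS_NAMES = ["background", "workpiece", "gripper"]  # hypothetical label set


def label_pixels(scores):
    """Return the index of the highest-scoring class for every pixel.

    scores[row][col] is a list holding one score per class.
    """
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]


# Made-up per-class scores for a tiny 2x2 image.
scores = [
    [[0.90, 0.05, 0.05], [0.20, 0.70, 0.10]],
    [[0.10, 0.80, 0.10], [0.10, 0.20, 0.70]],
]
labels = label_pixels(scores)
for row in labels:
    print([CLASS_NAMES[i] for i in row])
```

The result is a label map with the same height and width as the image, which downstream steps such as recognition or semantic SLAM can then consume.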

Panoramic Review of the Machine Vision Industry Chain

The vision-guided robotics ecosystem, or industry chain, is split into three levels.

Upstream (Suppliers)

The upstream level consists of the hardware and software providers that make the robot vision system work. Hardware providers supply the industrial camera, image capture card, image processor, light source equipment (LED), lens, optical ballast, accessories, etc.

On the other hand, software providers supply machine vision software and algorithms, which include vision processing software, algorithm platforms and their libraries, image processing software, etc.

Mid-Stream (Robotic Equipment Manufacturing and System Integration)

Mid-stream manufacturing merges these hardware and software components to form equipment for visual guidance, recognition, measurement, and inspection. These can be further integrated to form ready-to-use solutions for robot guidance, safety inspection, quality inspection, and more.

Downstream (Applications)

These solutions can be implemented in various industries, such as electronics, semiconductor, food, beverage, and flat panel display manufacturing for safety and quality inspection, and in automobiles for self-driving and driver assistance capabilities, as well as automated battery charging.

How Does Robotic Vision Work?

Before the robot is deployed on the factory floor, it must be trained to identify objects. With that done, here’s how the system works. We’ll consider an industrial robot that normally sits in a waiting or standby state.

- The workpiece positioning detector is always on and senses when an object moves near the center of the camera’s field of view. When it detects one, it sends an activation signal, or trigger pulse, to the image acquisition card.

- The card sends start pulses to the camera and the lighting system according to the pre-set program and delay.

- If the camera is active, it stops the current scan and starts a new frame scan. But if it is on standby, the start pulse triggers it to initiate frame scanning. The vision camera turns on the exposure mechanism before scanning the frame, and you can configure the exposure time in advance to control frame scanning.

- The other start pulse turns on the LED lighting and the card ensures the time the light is on matches the camera’s exposure time.

- After exposure, image frame scanning and output start, producing an analog video signal from the image sensor.

- The image acquisition card converts the analog signal into digital. But if the camera is digital, this step is unnecessary. The card then stores the digital image in the computer’s memory.

- A processor in the card analyzes the image for identification using AI algorithms to obtain values like X, Y, and Z measurements or logical control values.

- The results from this image processing are sent to the assembly line control unit, which performs any corrective action if necessary. Typical actions include positioning and motion adjustments.
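The sequence above can be sketched as a small timing model. This is a simplified illustration rather than real acquisition-card firmware: the `CycleConfig` fields, the function names, and the example timings are all assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass
class CycleConfig:
    trigger_delay_ms: float  # pre-set delay between part detection and exposure
    exposure_ms: float       # pre-set camera exposure time


def strobe_window(cfg):
    """LED on/off times (ms after the trigger), matched to the exposure window."""
    start = cfg.trigger_delay_ms
    return start, start + cfg.exposure_ms


def cycle_steps(camera_is_digital):
    """Ordered steps of one inspection cycle, following the list above."""
    steps = [
        "detector senses part and sends trigger pulse to acquisition card",
        "card sends start pulses to camera and lighting",
        "camera exposes while the LED is on",
        "camera scans the frame and outputs the signal",
    ]
    if not camera_is_digital:
        # Only analog cameras need the conversion step on the card.
        steps.append("card converts analog signal to digital")
    steps += [
        "card stores digital image in memory",
        "processor analyzes image for position or pass/fail values",
        "results sent to assembly line control unit",
    ]
    return steps


# Hypothetical timings: 5 ms delay after the trigger, 2 ms exposure.
cfg = CycleConfig(trigger_delay_ms=5.0, exposure_ms=2.0)
led_on, led_off = strobe_window(cfg)
print(f"LED on at {led_on} ms, off at {led_off} ms")
for number, step in enumerate(cycle_steps(camera_is_digital=False), start=1):
    print(number, step)
```

Keeping the strobe window tied to the exposure time in one place reflects the point that the card, not the camera or the light, is responsible for keeping the two in sync.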

Robot Vision vs. Computer Vision: What’s The Difference?

A thin line separates these two. Computer vision is the more general term, covering both robot vision and machine vision, and it involves extracting information from images to make sense of the pixel data and the objects in them. In a nutshell, computer vision is about deriving meaning from images, with object detection as one common task.

But robot vision is a subset of computer vision that focuses on engineering and science domains (computer vision is more of a research domain). Therefore, robotic vision must incorporate other algorithms and techniques to make the robot physically interact with its surrounding environment. For instance, kinematics and reference frame calibration enable the robot to move, pick objects, and avoid obstacles in its surroundings.
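Reference frame calibration boils down to knowing the transform between the camera's coordinate frame and the robot's base frame, so that a point seen by the camera can be expressed where the arm can reach it. The sketch below uses a 4x4 homogeneous transform with a rotation about the Z axis only, which is a simplification; the mounting angle, offsets, and function names are hypothetical.

```python
import math


def make_transform(yaw_deg, tx, ty, tz):
    """4x4 homogeneous transform: rotate about Z by yaw_deg, then translate."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(transform, point):
    """Apply a 4x4 homogeneous transform to a 3D point (x, y, z)."""
    vec = (point[0], point[1], point[2], 1.0)
    return tuple(
        sum(transform[r][c] * vec[c] for c in range(4)) for r in range(3)
    )


# Hypothetical calibration result: camera rotated 90 degrees about Z and
# mounted 0.5 m forward and 0.3 m above the robot base origin.
base_from_camera = make_transform(yaw_deg=90.0, tx=0.5, ty=0.0, tz=0.3)

# An object detected at (0.2, 0.0, 0.1) m in the camera frame.
object_in_base = transform_point(base_from_camera, (0.2, 0.0, 0.1))
print("object in robot base frame:", [round(v, 3) for v in object_in_base])
```

A real calibration would estimate a full 3D rotation and translation, but the principle is the same: every detection is multiplied by the calibrated transform before the motion planner uses it.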

Machine vision is often used interchangeably with robot vision, but it is slightly different. As the other subset of computer vision, this engineering domain refers to the industrial use of vision for specific applications, such as process control, automated inspection, and robot guidance (as one specific function).

A machine vision system for quality inspection

Uses of Robot Vision

Since robot vision covers all the vision functions that a robot’s eyes can perform, it provides these individual machine vision capabilities.

- Image Recognition: This use case mostly applies to reading QR codes and barcodes, which helps to enhance production efficiency

- Image Detection: Functions like color comparison and positioning during printing and product quality inspection rely on image detection

- Visual Positioning: This machine vision application helps the robot to find the positions of detected objects for picking or moving, such as during packaging

- Object Sorting: Robots can also sort objects based on the captured, recognized, and processed images, which helps to split products according to grades, sizes, or defects

- Object Measurement: This is a non-contact application that helps to measure or count gears, connector pins, automotive components, etc. It prevents the secondary damage that might be caused by contact-based measurement.
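Visual positioning, measurement, and sorting can all be illustrated on a tiny binary mask, where 1 marks pixels that belong to the detected part. The sketch below is a toy example under stated assumptions: the mask, the 0.5 mm per pixel scale, the nominal width, and the tolerance are invented for illustration.

```python
def centroid(mask):
    """Centroid (row, col) of the nonzero pixels in a binary mask."""
    points = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not points:
        raise ValueError("mask contains no object pixels")
    n = len(points)
    return sum(r for r, _ in points) / n, sum(c for _, c in points) / n


def width_mm(mask, mm_per_pixel):
    """Horizontal extent of the object, converted from pixels to millimetres."""
    cols = [c for row in mask for c, v in enumerate(row) if v]
    if not cols:
        raise ValueError("mask contains no object pixels")
    return (max(cols) - min(cols) + 1) * mm_per_pixel


def grade(measured_mm, nominal_mm, tolerance_mm):
    """Sort a part into 'pass' or 'reject' based on its measured width."""
    return "pass" if abs(measured_mm - nominal_mm) <= tolerance_mm else "reject"


# Toy 4x5 mask of a detected part (1 = part, 0 = background).
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
position = centroid(mask)                # where to pick the part (pixel coords)
width = width_mm(mask, mm_per_pixel=0.5)  # assumed camera scale
result = grade(width, nominal_mm=1.5, tolerance_mm=0.1)
print(position, width, result)
```

In practice the mask would come from the segmentation or detection stage, and the pixel position would still need converting to robot coordinates before the arm can act on it.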

Why Use AI in Robotic Vision?

AI or machine learning introduces intelligence in pattern and object detection in robotic vision, which gives these benefits.

Enhances Flexible Manufacturing

With intelligence and training built in, robots can adapt to different lighting conditions, positions, and environments while still operating accurately. For instance, a robot trained for color detection can maintain high accuracy and detection rates even when other operating variables, such as depth and lighting, change.

AI also makes it possible for the robot to learn from past mistakes and self-calibrate to enhance efficiency. Humans can also point out its mistakes, providing feedback for reinforcement learning so the system calibrates faster.

However, AI vision accuracy still has limitations, with an error rate of about 15%. Manual re-inspection is therefore necessary to ensure high-quality standards are met, which can increase production costs significantly. As the technology matures, the error rate is expected to fall, and if it drops below the 5% threshold, AI-based robot and machine vision might no longer need manual re-inspection.
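To put those percentages in perspective, the short sketch below works out how many parts in a batch would be misjudged at a given error rate and applies the 5% threshold mentioned above. The batch size and function names are assumptions for illustration only.

```python
REINSPECTION_THRESHOLD = 0.05  # the 5% threshold mentioned in the text


def expected_misjudged(parts, error_rate):
    """Expected number of parts the vision system gets wrong in a batch."""
    return parts * error_rate


def needs_manual_reinspection(error_rate, threshold=REINSPECTION_THRESHOLD):
    """Manual re-inspection is needed until the error rate falls below the threshold."""
    return error_rate >= threshold


# Hypothetical batch of 1,000 parts at the error rates discussed above.
for rate in (0.15, 0.04):
    wrong = expected_misjudged(1000, rate)
    print(f"error rate {rate:.0%}: about {wrong:.0f} misjudged parts, "
          f"manual re-inspection needed: {needs_manual_reinspection(rate)}")
```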

Improves Industrial Robotic Capabilities

Machine learning can also enhance the anti-interference and error compensation capabilities of the industrial robot. For instance, when polishing a car’s surface, the process must follow a fixed procedure when using traditional robots. If there’s human interference, the vehicle might exit the paint shop with defects. But AI enables the robot to sense these changes and compensate for any errors to make the polishing process achieve the required results.

The robot can also automatically optimize this production process by self-recalibration to enhance efficiency. When paired with IoT, AI can use sensor data for big data analysis to make the production line as efficient as possible.

These intelligent robots are reusable as well because certain tasks or programs can be applied to several processes on the manufacturing floor. For instance, the polishing robot can polish cars, bikes, and other products because the surface quality finish is the most important factor to consider. So you don’t have to reprogram these robots to do these tasks.

Trends in Robotic Vision

- 3D Imaging: 3D vision enables robots to identify and pick randomly placed parts in large quantities to optimize picking operation efficiency.

- Hyperspectral Imaging: This imaging captures many narrow spectral bands, which makes it possible to analyze the chemical composition of materials from their spectral signatures rather than from visible color alone. It can also monitor defects or detect impurities, such as plastics during meat production.

- Thermal Imaging for Industrial Inspection: Thermal imaging can be paired with regular cameras to provide a holistic inspection system to monitor temperature changes when testing cars or electronics. This extra eye sees what the regular eye can’t.

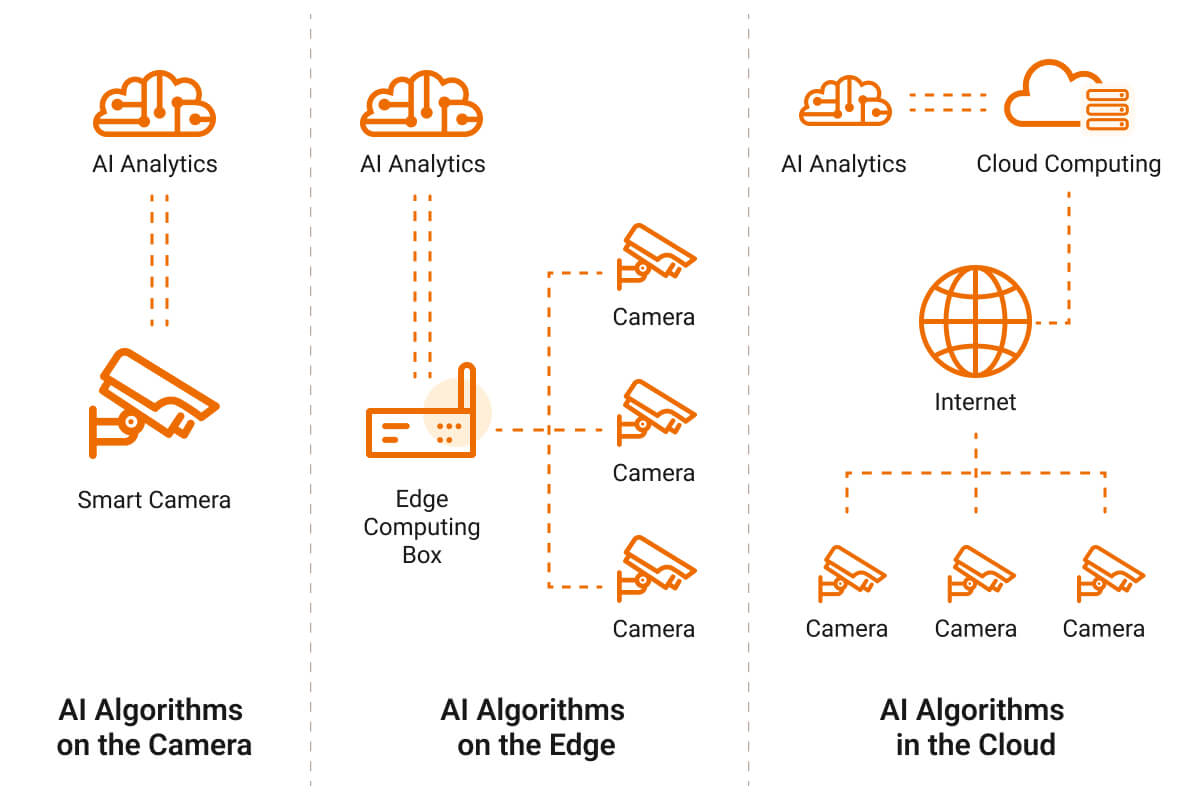

- Cloud Deep Learning: Robot vision can also provide tons of data for inferencing by deep learning algorithms in the cloud. Although there are latency and security issues associated with WAN, 5G and mMTC (Massive Machine-Type Communication) provide fast and secure communication to make cloud processing more feasible, making it possible to have “thin” robots.

Introducing Dusun IoT’s Embedded Vision AI SoMs

Dusun IoT’s most capable SoM for building robot vision is the RK3588J. In the robotic vision industry chain, this product falls under the upstream level (hardware section), so you can install the necessary software and interface it with the other required hardware to build the ROS computer vision system solution for your customers.

The SoM features a built-in 6 TOPS NPU to run the AI algorithms, and it supports large offline models, object recognition (including facial), monocular distance measurement, and flexible computation scaling to handle different tasks.

For data feeds, the SoM’s MIPI CSI supports up to 8 camera inputs, and its USB ports can run the lighting features. The camera, or the arm supporting it, also needs to move, and the RK3588J has CAN interfaces for precise motor control. These interfaces can also drive the wheels and control motor speed if the robot is mobile.

Interested in the RK3588J SoM? Contact us for more details and check out more of its features here.